LimaRP-Dolphistral-7B (ChatML, 8-bit LoRA adapter)

This is a version of LimaRP specifically finetuned for Dolphin-2.0-Mistral-7B, using about 1900 conversations of up to 8k tokens in length and Sliding Window Attention (SWA). This LoRA adapter may not work as intended on the base Mistral-7B-v0.1.

For more details about LimaRP, see the model page for the previously released v2 version for Llama-2. Most details written there apply to this version as well. Generally speaking, LimaRP is a longform-oriented, novel-style roleplaying chat model intended to replicate the experience of 1-on-1 roleplay on Internet forums. Short-form, IRC/Discord-style RP (aka "Markdown format") is not supported yet. The model does not include instruction tuning, only manually picked and slightly edited RP conversations with persona and scenario data.

Prompt format

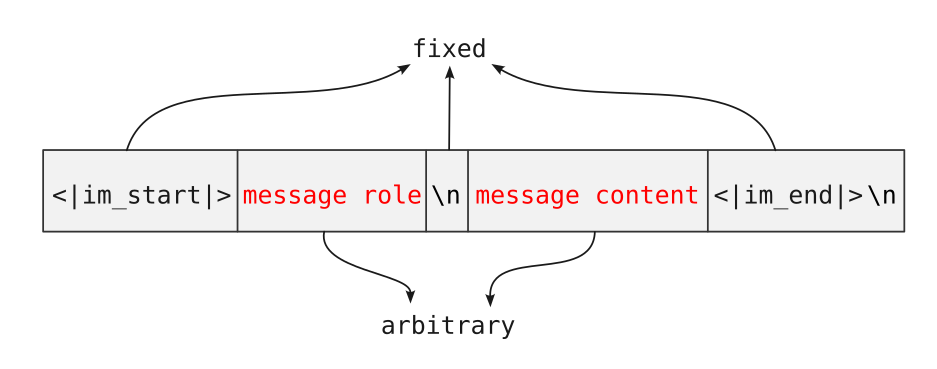

LimaRP-Dolphistral uses ChatML without special tokens and with LimaRP-specific chat roles that mimic the Alpaca format. Keep in mind that ChatML roles are supposed to be flexible (as this blogpost from HuggingFace suggests); they are not intended to follow only the "user-assistant" paradigm. Chatting front-ends will have to take this into consideration (or some sort of standardization will need to occur in the future for chat roles, without getting stuck on user and assistant).

[...] We think the closest thing to a 'standard' for formatting is the ChatML format created by OpenAI. If you're training a new model for chat, and this format is suitable for you, we recommend using it and adding special <|im_start|> and <|im_end|> tokens to your tokenizer. It has the advantage of being very flexible with roles, as the role is just inserted as a string rather than having specific role tokens. If you'd like to use this one, it's the third of the templates above, [...]

<|im_start|>system

Character's Persona: {bot character description}

User's Persona: {user character description}

Scenario: {what happens in the story}

Play the role of Character. You must engage in a roleplaying chat with User below this line. Do not write dialogues and narration for User.<|im_end|>

<|im_start|>input

User: {utterance}<|im_end|>

<|im_start|>response

Character: {utterance}<|im_end|>

(etc.)

You should:

- Replace all text in curly braces (curly braces included) with your own text.

- Replace User and Character with appropriate names.
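As a rough illustration of the template above, the following sketch assembles a prompt from persona data and a list of turns. The function name `build_prompt`, its parameters, the exact newline placement, and the choice to end the prompt with an open response turn prefixed by the character name are all assumptions made for this example; they are not taken from the repository's presets.

```python
def build_prompt(char, user, char_persona, user_persona, scenario, turns):
    """Assemble a LimaRP-style ChatML prompt (hypothetical helper).

    `turns` is a list of (speaker_name, text) pairs in chronological order.
    Turns spoken by `user` use the "input" role, all others use "response".
    """
    lines = [
        "<|im_start|>system",
        f"{char}'s Persona: {char_persona}",
        "",
        f"{user}'s Persona: {user_persona}",
        "",
        f"Scenario: {scenario}",
        "",
        f"Play the role of {char}. You must engage in a roleplaying chat with "
        f"{user} below this line. Do not write dialogues and narration for "
        f"{user}.<|im_end|>",
    ]
    for speaker, text in turns:
        role = "input" if speaker == user else "response"
        lines.append(f"<|im_start|>{role}")
        lines.append(f"{speaker}: {text}<|im_end|>")
    # Assumption: leave a response turn open so the model writes as `char`.
    lines.append("<|im_start|>response")
    lines.append(f"{char}:")
    return "\n".join(lines)


prompt = build_prompt(
    char="Alice",
    user="Bob",
    char_persona="A curious librarian.",
    user_persona="A traveling merchant.",
    scenario="They meet in a quiet library.",
    turns=[("Bob", "Hello there.")],
)
print(prompt)
```

Because the roles are plain strings rather than special tokens, the same helper works with any tokenizer; only the front-end needs to know about the `input` and `response` role names.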

Message length control

Inspired by the previously named "Roleplay" preset in SillyTavern, with this version of LimaRP it is possible to append a length modifier to the response instruction sequence, like this:

<|im_start|>input

User: {utterance}<|im_end|>

<|im_start|>response (length = medium)

Character: {utterance}<|im_end|>

This has an immediately noticeable effect on bot responses. The lengths used during training are:

micro, tiny, short, medium, long, massive, huge, enormous, humongous, unlimited.

The recommended starting length is medium. Keep in mind that the AI can ramble or impersonate the user with very long messages.

The length control effect is reproducible, but messages will not follow the requested length very precisely; rather, they fall within certain ranges on average, as seen in this table with data from tests made with one reply at the beginning of the conversation:

Response length control also appears to work well deep into the conversation. When the modifier is omitted, the model will choose the most appropriate response length (although it might not necessarily be what the user desires).
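A front-end could build the response instruction sequence with a small helper such as the one below. This is only a sketch: the names `LENGTHS` and `response_header` are hypothetical, and the only details taken from this card are the list of length names and the `(length = ...)` suffix format.

```python
# Length names listed above as used during training.
LENGTHS = (
    "micro", "tiny", "short", "medium", "long",
    "massive", "huge", "enormous", "humongous", "unlimited",
)


def response_header(length=None):
    """Return the response role line, optionally with a length modifier.

    Passing None omits the modifier and lets the model pick the length.
    """
    if length is None:
        return "<|im_start|>response"
    if length not in LENGTHS:
        raise ValueError(f"unknown length {length!r}; expected one of {LENGTHS}")
    return f"<|im_start|>response (length = {length})"


print(response_header("medium"))
print(response_header())
```

Rejecting unknown names early is a design choice of this sketch; the model was only trained on the names listed above, so other values may have no predictable effect.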

Suggested settings

You can find Instruction and Prompt/Context presets in the repository.

Text generation settings

As with the base Mistral-7B-v0.1, repetition issues still persist. A low temperature combined with a relatively high repetition penalty and a low repetition penalty range may help. Otherwise, a reasonable starting point is:

- TFS = 0.90

- Temperature = 0.70

- Repetition penalty = ~1.1

- top-k = 0 (disabled)

- top-p = 1 (disabled)
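For scripted use, the starting values above can be kept in a plain dictionary and adjusted per request. The key names below are hypothetical, since sampler parameter names differ between backends (and not every backend implements tail-free sampling); map them to whatever your inference server expects.

```python
# Starting point from the list above; key names are illustrative only.
SUGGESTED_SETTINGS = {
    "tfs": 0.90,
    "temperature": 0.70,
    "repetition_penalty": 1.1,  # the card gives this as approximately 1.1
    "top_k": 0,    # 0 disables top-k
    "top_p": 1.0,  # 1 disables top-p
}


def with_overrides(**overrides):
    """Return a copy of the suggested settings with some values replaced."""
    unknown = set(overrides) - set(SUGGESTED_SETTINGS)
    if unknown:
        raise KeyError(f"unknown setting(s): {sorted(unknown)}")
    return {**SUGGESTED_SETTINGS, **overrides}


# Example: try a lower temperature when repetition becomes a problem.
settings = with_overrides(temperature=0.5)
print(settings)
```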

Training procedure

Axolotl was used for training on a single NVIDIA A40 GPU graciously provided by Arc Compute.

The model has been trained as an 8-bit LoRA adapter. The adapter is large because a LoRA rank of 256 was used.
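To give a sense of why rank 256 produces a large adapter, the sketch below counts LoRA parameters under stated assumptions: the layer dimensions are those of the published Mistral-7B-v0.1 configuration (not stated in this card), every linear projection in each decoder layer is adapted, and the output head is not. Each adapted module with shape (in, out) adds rank × (in + out) parameters.

```python
# Assumed Mistral-7B-v0.1 dimensions (from its published config, not this card).
HIDDEN = 4096
INTERMEDIATE = 14336
KV_DIM = 1024  # 8 key/value heads x 128 dimensions per head
NUM_LAYERS = 32

# (in_features, out_features) of each linear module in one decoder layer.
LINEAR_SHAPES = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}


def lora_param_count(rank):
    """Total LoRA parameters: rank * (in + out) per module, over all layers."""
    per_layer = sum(rank * (i + o) for i, o in LINEAR_SHAPES.values())
    return per_layer * NUM_LAYERS


print(lora_param_count(256))  # 671088640
print(lora_param_count(16))   # 41943040
```

Under these assumptions the adapter holds roughly 671 million parameters, sixteen times more than a rank-16 adapter over the same modules. The on-disk size then depends on the data type in which the adapter weights are saved.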

Training hyperparameters

- learning_rate: 0.0001

- lr_scheduler_type: constant

- num_epochs: 3

- sequence_len: 8192

- lora_r: 256

- lora_alpha: 16

- lora_dropout: 0.05

- lora_target_linear: true

- bf16: true

- fp16: false

- tf32: true

- load_in_8bit: true

- adapter: lora

- micro_batch_size: 1

- gradient_accumulation_steps: 16

- warmup_steps: 0

- optimizer: adamw_bnb_8bit
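Two quantities follow directly from the values above and are sometimes easier to reason about than the raw settings: the effective batch size and the LoRA scaling factor. The sketch below assumes a single GPU (as stated in the training procedure) and the conventional LoRA scaling of alpha divided by rank; the variable names are chosen for this example.

```python
micro_batch_size = 1
gradient_accumulation_steps = 16
num_gpus = 1  # single A40, per the training procedure above
lora_r = 256
lora_alpha = 16

# Sequences contributing to each optimizer step.
effective_batch_size = micro_batch_size * gradient_accumulation_steps * num_gpus

# Conventional LoRA scaling applied to the low-rank update (alpha / r).
lora_scale = lora_alpha / lora_r

print(effective_batch_size)  # 16
print(lora_scale)            # 0.0625
```

With a rank much larger than alpha, the low-rank update is scaled down considerably, which interacts with the choice of learning rate.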

Loss curves

Loss values are significantly higher than normally seen because training is performed on entire sequences. Training was initially started with a higher learning rate, which was decreased after 0.5 epochs because it seemed too high.

Train loss

Eval loss